AI completion¶

marimo comes with GitHub Copilot, a tool that helps you write code faster by offering inline suggestions based on the context of your current code.

marimo can also use AI to refactor a cell, finish writing a cell, or write a full cell from scratch. This feature is currently experimental and is not enabled by default.

GitHub Copilot¶

Like VS Code, the marimo editor natively supports GitHub Copilot, an AI pair programmer.

Get started with Copilot:

Install Node.js.

Enable Copilot via the settings menu in the marimo editor.

Note: Copilot is not yet available in our conda distribution; please install marimo using pip if you need Copilot.

Codeium Copilot¶

Go to the Codeium website and sign up for an account: https://codeium.com/

Install the browser extension: https://codeium.com/chrome_tutorial

Open the settings for the Chrome extension and click on “Get Token”.

Right-click on the extension window and select “Inspect” to open the developer tools for the extension. Then click on “Network”.

Copy the token and paste it into the input area, and then press “Enter Token”

This action will log a new API request in the Network tab. Click on “Preview” to get the API key.

Paste the API key in the marimo settings in the UI, or add it to your marimo.toml file as follows:

[completion]

copilot = "codeium"

codeium_api_key = ""

Alternative: Obtain Codeium API key using VS Code¶

Go to the Codeium website and sign up for an account: https://codeium.com/

Install the Codeium Visual Studio Code extension (see here for complete guide)

Sign in to your Codeium account in the VS Code extension

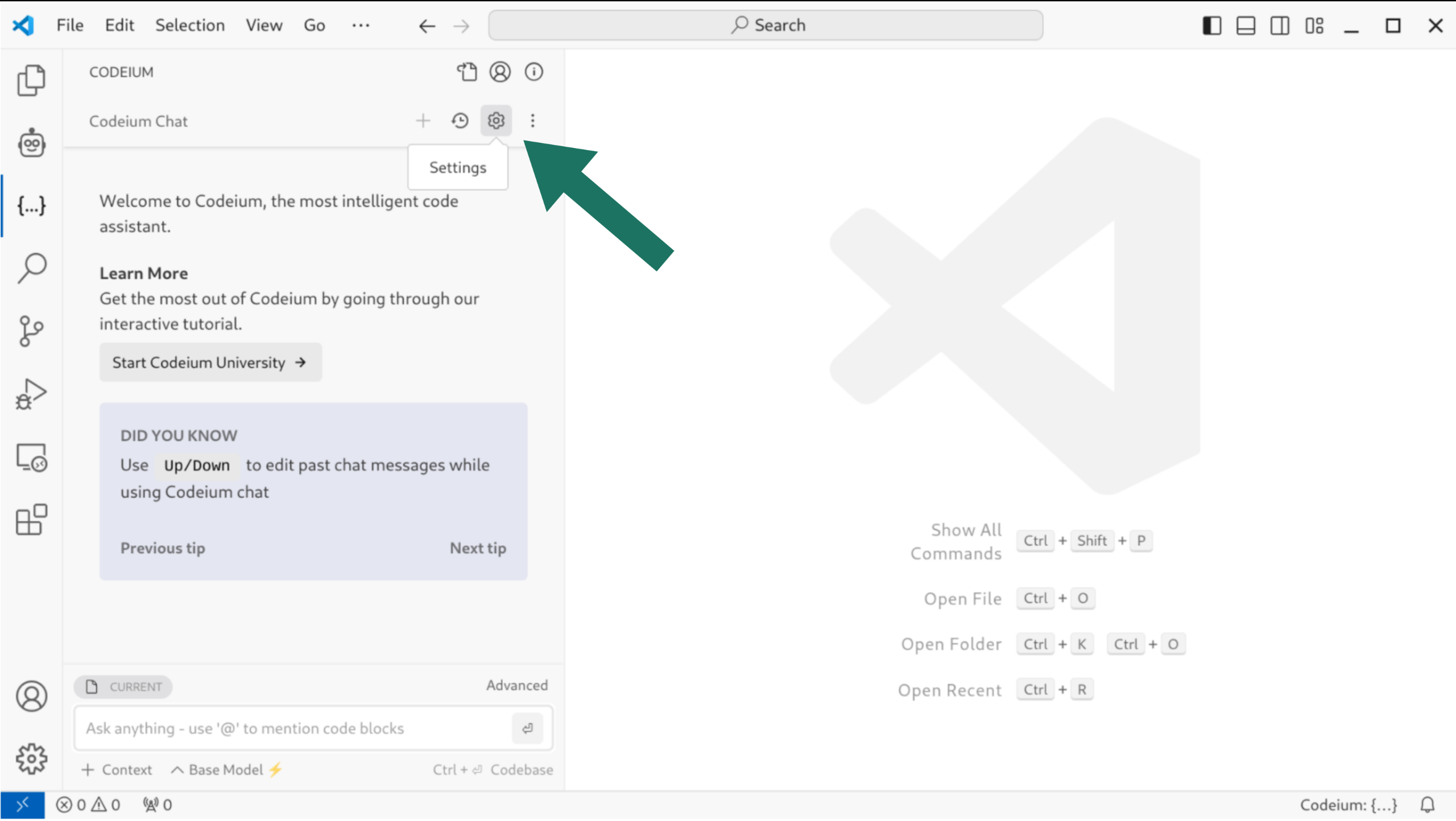

Select the Codeium icon on the Activity bar (left side), which opens the Codeium pane

Select the Settings button (gear icon) in the top-right corner of the Codeium pane

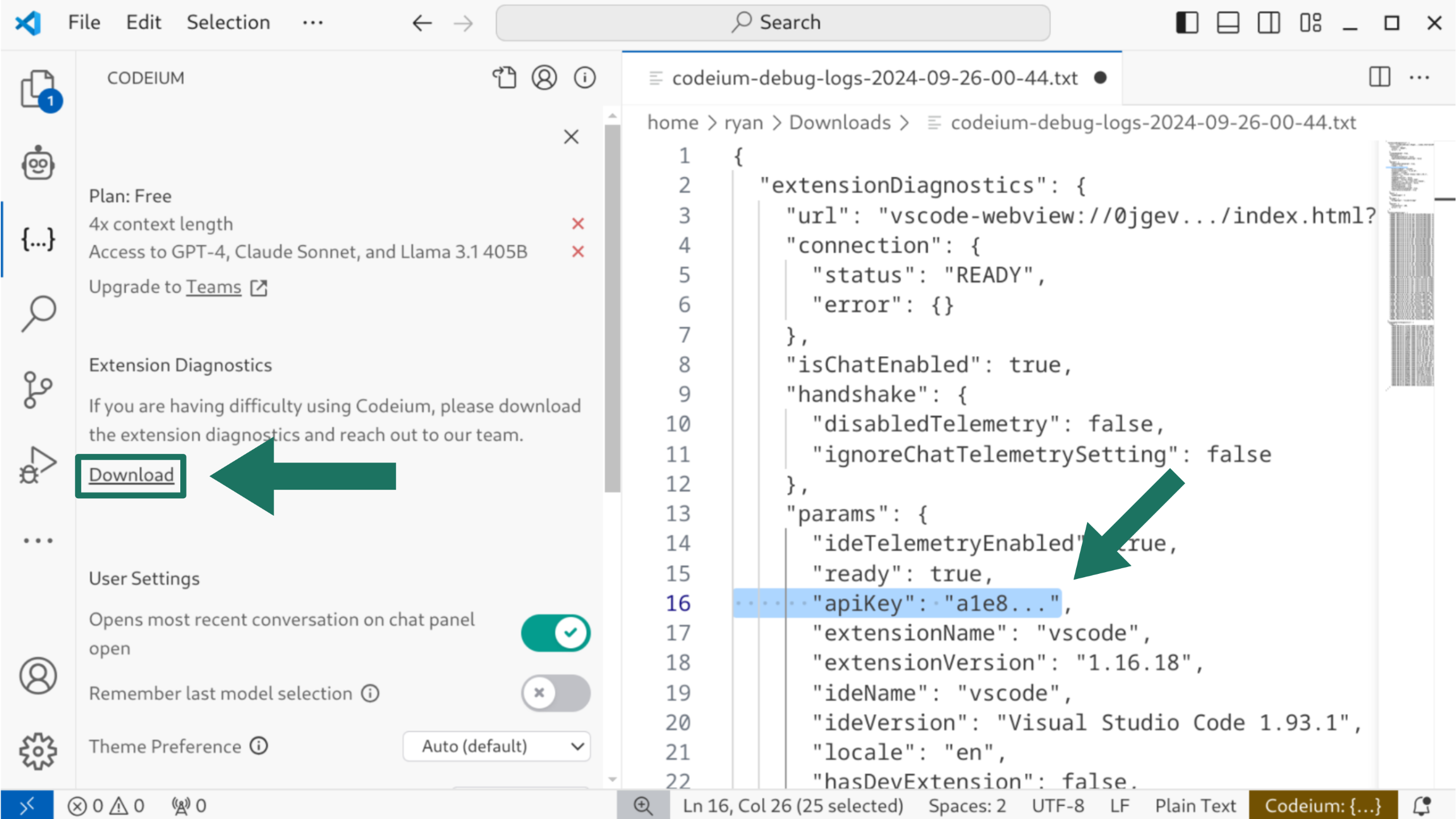

Click the Download link under the Extension Diagnostics section

Open the diagnostic file and search for apiKey.

Copy the value of apiKey to .marimo.toml in your home directory:

[completion]

codeium_api_key = "a1e8..." # <-- paste your API key here

copilot = "codeium"

activate_on_typing = true

Generate code with our AI assistant¶

marimo has built-in support for generating and refactoring code with AI, and works with a variety of providers: hosted AI providers, such as OpenAI and Anthropic, and local models served via Ollama.

Below we describe how to connect marimo to your AI provider. Once enabled, you can generate entirely new cells by clicking the “Generate with AI” button at the bottom of your notebook. You can also refactor existing cells by pressing Ctrl/Cmd-Shift-e in a cell, which opens an input for modifying the cell with AI.

Using OpenAI¶

Install openai:

pip install openai

Add the following to your marimo.toml:

[ai.open_ai]

# Get your API key from https://platform.openai.com/account/api-keys

api_key = "sk-proj-..."

# Choose a model, we recommend "gpt-4-turbo"

model = "gpt-4-turbo"

# Change the base_url if you are using a different OpenAI-compatible API

base_url = "https://api.openai.com/v1"

Using Anthropic¶

To use Anthropic with marimo:

Sign up for an account at Anthropic and grab your Anthropic Key.

Add the following to your marimo.toml:

[ai.open_ai]

model = "claude-3-5-sonnet-20240620"

# or any model from https://docs.anthropic.com/en/docs/about-claude/models

[ai.anthropic]

api_key = "sk-ant-..."

Using other AI providers¶

marimo supports OpenAI’s GPT-3.5 API by default. If your provider is compatible with OpenAI’s API, you can use it by changing the base_url in the configuration.

For other providers not compatible with OpenAI’s API, please submit a feature request or “thumbs up” an existing one.
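To make "compatible with OpenAI’s API" a little more concrete: OpenAI-style clients generally send chat requests to the `/chat/completions` path under the configured `base_url`. The sketch below shows that URL construction for two base URLs that appear in this guide. The helper `chat_completions_url` is hypothetical, and this reflects the general OpenAI API convention rather than a description of marimo's internal code.

```python
def chat_completions_url(base_url: str) -> str:
    """Join a base URL with the chat completions path used by OpenAI-style APIs.

    Hypothetical helper for illustration only.
    """
    # Strip any trailing slash so the result never contains "//".
    return base_url.rstrip("/") + "/chat/completions"


for base_url in ("https://api.openai.com/v1", "http://localhost:11434/v1"):
    print(chat_completions_url(base_url))
# prints https://api.openai.com/v1/chat/completions
# prints http://localhost:11434/v1/chat/completions
```

A provider that answers requests at that path in the same request and response format can usually be used by changing only `base_url`.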

Using local models with Ollama¶

Ollama allows you to run open-source LLMs (e.g. Llama 3.1, Phi 3, Mistral, Gemma 2) on your local machine. To integrate Ollama with marimo:

Download and install Ollama.

Download the model you want to use:

ollama pull llama3.1

We also recommend codellama (code specific).

Start the Ollama server:

ollama run llama3.1

Visit http://localhost:11434 to confirm that the server is running.

Add the following to your marimo.toml:

[ai.open_ai]

api_key = "ollama" # This is not used, but required

model = "llama3.1" # or the model you downloaded from above

base_url = "http://localhost:11434/v1"
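The browser check for the Ollama server can also be scripted. This is a minimal sketch using only the Python standard library; the function name `server_is_up` is hypothetical, and the default URL assumes Ollama is listening on the address shown above.

```python
import urllib.error
import urllib.request


def server_is_up(url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at `url` with a 2xx status.

    Hypothetical helper for illustration; not part of marimo or Ollama.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or a non-2xx HTTP error.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", server_is_up())
```

If this reports that the server is unreachable, marimo's AI features will not be able to reach the `base_url` configured above either, so start the Ollama server first.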

Using Google AI¶

To use Google AI with marimo:

Sign up for an account at Google AI Studio and obtain your API key.

Install the Google AI Python client:

pip install google-generativeai

Add the following to your marimo.toml:

[ai.open_ai]

model = "gemini-1.5-flash"

# or any model from https://ai.google.dev/gemini-api/docs/models/gemini

[ai.google]

api_key = "AI..."

You can now use Google AI for code generation and refactoring in marimo.